AI & diffusion models: artistic, scientific, and industrial revolution tomorrow?

Artificial intelligence has always distinguished itself by its ability to suddenly break down technical barriers that were once thought immovable. An overview of the new developments made possible by AI.

Article written by Eric Debeir, lead data scientist

Summary

Diffusion models?

You've probably heard about one of these recent revolutions, with the generation of stunning images from descriptive phrases. If not, here's a quick catch-up: for the past few months, it has been possible through numerous tools to create new images using neural networks... Here are a few examples :

- Prompt: A full shot of a cute magical monster cryptid wearing a dress made of opals and tentacles. chibi. subsurface scattering. translucent skin. caustics. prismatic light. defined facial features, symmetrical facial features. opalescent surface. soft lighting. beautiful lighting. by giger and ruan jia and artgerm and wlop and william-adolphe bouguereau and loish and lisa frank. sailor moon. trending on artstation, featured on pixiv, award winning, sharp, details, intricate details, realistic, hyper-detailed, hd, hdr, 4k, 8k.

- The war by Robert Capa. Prompt : Complex 3 d render of a beautiful porcelain cyberpunk robot ai face, beautiful eyes. red gold and black, fractal veins. dragon cyborg, 1 5 0 mm, beautiful natural soft light, rim light, gold fractal details, fine lace, mandelbot fractal, anatomical, glass, facial muscles, elegant, ultra detailed, metallic armor, octane render, depth of field

- Prompt: Complex 3 d render of a beautiful porcelain cyberpunk robot ai face, beautiful eyes. red gold and black, fractal veins. dragon cyborg, 1 5 0 mm, beautiful natural soft light, rim light, gold fractal details, fine lace, mandelbot fractal, anatomical, glass, facial muscles, elegant, ultra detailed, metallic armor, octane render, depth of field

These examples were randomly selected from the excellent site : https://lexica.art/

Many research projects have tackled this subject of image generation : Google's Imagen, DALL.E. But it's a more recent work that has revolutionized the Internet, notably because the models were, this time, freely accessible under an Open-Source license: the Latent Diffusion Models. Since the release of this tool, a huge debate has emerged within the illustrator community :

- Can these models be seen as tools for artistic creation? Instinctively, no, and yet, we're only scratching the surface of their possible uses...

- What about the competition tomorrow between illustrators who will spend a considerable amount of time on their work, and the use of an AI model? Indeed, it's highly likely that in many cases, clients won't necessarily appreciate the added value of genuine artistic creation.

- Given that these models have been trained on a massive database of images mostly comprised of works by real artists, how should we consider the model's results, especially when users don't hesitate to use the names of artists still active ( https://huggingface.co/spaces/stabilityai/stable-diffusion/discussions/731 or https://huggingface.co/spaces/stabilityai/stable-diffusion/discussions/688) ? ) ?

Emergence of diffusion models

Beyond these debates, from a technical standpoint, we can observe the emergence of a new family of artificial intelligence tools"diffusion models").

This family of tools has already shown remarkable results in image learning.

Let's explore together, at a high level, the particularity of these tools, how they can be applied to other problems, and the general opportunities brought by these models. However, it's undeniable that the scientific community in Deep Learning has widely embraced this topic with an explosion of research.

For example, the research teams at NVIDIA (leader (undisputed leader in generative models) recently proposed a publication that was recognized as an "outstanding paper" at the prestigious NEURIPS 2022, publication , where they delve into these models and offer a better understanding.

Beyond images: audio, 3D, human motion...

Let's start there. While the application to images has been widely spread on social media, it would be very limited to stop there. Indeed, we are facing a new architecture that already applies to many other domains, and tomorrow could apply to specific problems in a business context.

The application to images first is easily explained, and it's a common phenomenon in Deep LearningThe data is much easier to obtain, numerous applications exist, and we are much more tolerant of marginal errors in a generated image than we would be with generated music.

That being said, we have already seen very different and exciting applications.s

New applications

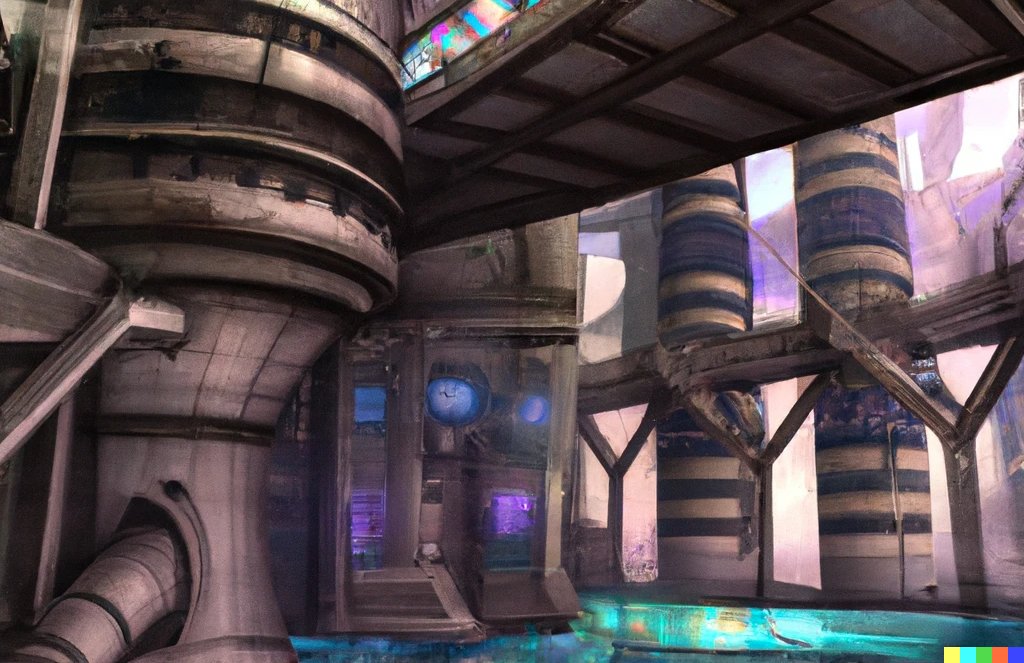

“Multi-instrument Music Synthesis with Spectrogram Diffusionis an application to music, where the diffusion model generates music excerpts with multiple instruments, directly working on spectrograms. A conditioning mechanism allows for continuity in a musical piece. This work is not yet impressive in terms of auditory quality, so we're not at the level of image generation where a casual observer could easily believe the work was generated by a human.

Nevertheless, it's clear that things are progressing rapidly. The most recent interesting work in music generation was OpenAI's Jukebox which was already very promising. The more recent publication is the work of Google's Magenta team, specializing in Deep Learning adapted to music, with many exciting works.

Tomorrow, this type of work can be applied to any approach in the audio domain. In fact (we'll discuss this later), such a model not only generates data but approximates its variances and controls them.

Modeling and generating human movements

Another exciting approachHuman Motion Diffusion Models”

It aims to model and generate human movements, particularly for animation purposes. This work aims to easily generate the movement of a 3D model representing an individual, via a simple phrase describing the action to be performed. This work is important because by learning to generate human movement, it implicitly learns to "summarize" or "compress" these movements, and can thus normalize or qualify detected movements.

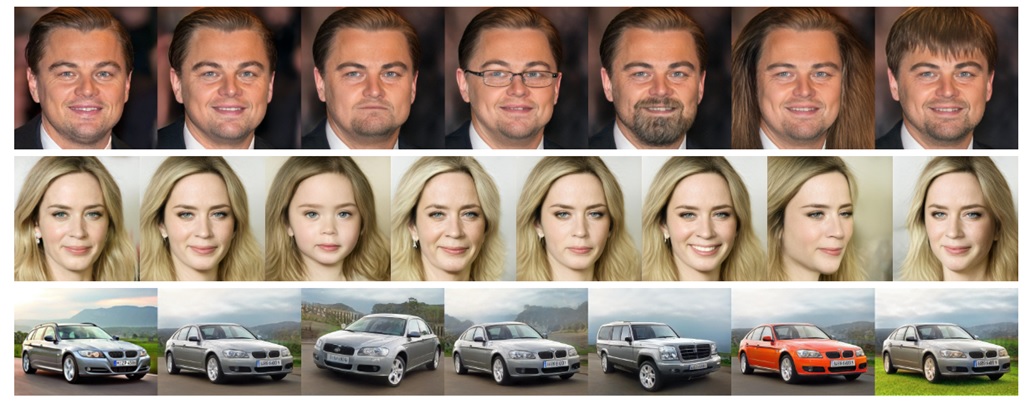

Generation of three-dimensional volumes

Generation of three-dimensional volumes A recent example is the generation of three-dimensional volumes directly from a generation phrase. Several works already exist, and we recommend the work of Google Research and BerkeleyDreamFusion: Text-to-3D using 2D Diffusion”.

Generating three-dimensional volumes is often a necessary step in modeling, and such tools can rapidly supply a processing architecture with new volumes using a very simple generation axis. Furthermore, similarly to image generation models, such a model learns the correspondence between certain terms and their expression in three dimensions, whether it pertains to the subject, style, position, etc. Future work will hopefully allow for better control of this type of generation through a more relevant separation of generation.

And the list grows longer every day. We have recently seen proposals for scientific approaches to localized detection by diffusion models, or even enriching BERT-like language models with this method...

Generative models: for what purposes ?

Brief reminder

Generative models are a family of models in Deep Learning (AI) specialized in data generation. Trained on a dataset composed of numerous elements, these models learn to generate data that is not directly present in the dataset but corresponds to the distribution of the data as represented by this dataset. In other words, such a model aims to learn the general rules specific to all elements of the dataset to successfully generate data that could have been found in this dataset. Mathematically, this is referred to as learning a distribution. Obviously, such a model is highly dependent on the variance of the data present in this dataset.

These models were relegated to science fiction until 2013/2014 with the emergence of two major families of generative models.

First family of generative models

The first family is that of Variational Autoencoders (VAE) by KingmaAt a very high level, these models learn to simplify data to the maximum while learning the diversity (mathematically, the distribution) of this data. They are therefore very valuable tools for approximating data in its complexity, with the possibility of applying many cross-sectional approaches: anomaly detection, clustering, etc. These tools go beyond simple generative models and have notably enabled the creation of AI systems with a notion of uncertainty in predictions.

Second family of generative models

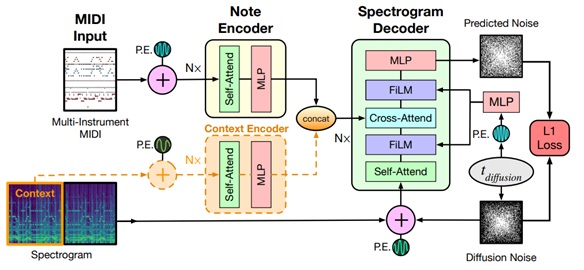

The second, more well-known family, is that of Generative Adversarial Networks (GAN) by Goodfellow. This approach is initially confusing, with a "duel" between a model learning to generate data and another model learning to criticize the generation. However, it easily allows for the creation of high-quality generative models. The famous website “This person does not exist” presents portraits of individuals who do not exist but have been generated by Nvidia's StyleGAN.

Until recently, the consensus was that GANs could yield better results in terms of visual quality, but that VAEs learned the diversity of data much better and thus represented a more powerful tool for working with complex data. Obviously, in Deep Learning, things never stay stable for very long. "VQ-VAEs" have emerged in the last three years, followed by diffusion models.

Interest in business and/or industrial processes

The interest of these tools in business and/or industrial processes should not be underestimated.

A generative model is a valuable tool for all data analysis operations. One could even argue that their ability to generate data is not their main attraction in applied terms, compared to their potential to be used as data exploration and analysis tools.

Approximating the distribution of data means becoming capable of identifying the major variances of this data, alone or combined, and then being able to question any new element against this distribution.

Anomaly detection, data simplification, taking uncertainty into account in a prediction or annotation, or clustering are just the tip of the iceberg. Beyond that, all these models learn to project the data into a much more agreeable space, where simple arithmetic operations result in significant modifications to the data. To perhaps clarify this approach, let's revisit the example of images and Nvidia's StyleGAN generating faces. It becomes possible to finely edit images, not by manipulating the pixels of the image, but by working on the data projection performed by the model :

(from https://github.com/yuval-alaluf/hyperstyle)

Diffusion models are thus more than just a flashy moment reserved for image generation. As generative models, these models have numerous applications, the majority of which have probably not yet been discovered.

Diffusion models: What opportunities lie ahead ?

What are the future interests?

Diffusion models are just beginning to emerge, but we can already contemplate what these new tools will bring us in terms of usage, beyond simple image processing. For this slightly acrobatic exercise, three sources can fuel our reflection :

- 1/ The exploitation of approaches stemming from variational inference (VAE or Normalizing Flows), which have pushed these models beyond simple data generation and are now serving as tools.

- 2/ Observing what the community produces on the Internet from the recent diffusion of the Stable Diffusion model, where new uses regularly appear.

- 3/ We see that this approach is already being applied to other types of data (audio, human motion, three-dimensional volumes), so we can imagine what these new data will bring.

Details of the points mentioned

The first point is undoubtedly the most fundamental but also the most complex to predict. A diffusion model learns to approximate data within its distribution (in the mathematical sense). This implies that it can be used for issues such as anomaly detection (for example, predictive maintenance). The coming months will show us if the academic world manages to produce results on this subject. Obviously, a precautionary principle must be maintained, and we shouldn't use a tool just because it's new and "sexy." Rather, we should question to what extent this new tool could improve our ability to address certain problems. Anomaly detection is a longstanding issue in the field of Machine Learning : it encompasses a wide range of very different topics with varying levels of complexity. A diffusion model offers a unique approach because it allows for iteration on different versions of the data by re-projecting it into the "normal" space that has been learned (via iterations of noise addition or removal). It is likely that some problems could thus be addressed in a new way. It's worth noting that an interest here could be to localize the anomaly in the image more effectively and to expose a distance between this anomaly and a "norm" learned by the model.

The second point is less scientific but should not be ignored. There is as much potential for innovation in a fundamental discovery as in the exploration of new uses. Simply tracking the experiments carried out with Stable Diffusion shows new approaches every week. For example, while everyone knows that an image can be generated from text, few know that it is also possible to generate an image by defining the elements that should appear in a global manner, via localization rectangles (a scheme derived from Rombach et al) :) :

Or even through a "sketch" in solid colors:

One final example is "inpainting," where we remove a part of the image and ask the diffusion model to regenerate the missing part. It will do so while respecting the remaining visible parts of the image, producing a "believable" image by generating the missing part :

Therefore, we are dealing with a highly versatile tool. Furthermore, the conditioning mechanism (which allows learning a link between input and generated image) is relatively flexible and is ready to be adapted to new concepts.

These tools go beyond simple generation to perform domain conversion, with numerous applications. Faced with a specific type of data modeling a business or industrial problem, we can map this data and modify it in a stunning way by conditioning it to simpler information.

In conclusion on the last point, we observe that diffusion models are being applied to various types of data (audio, image, text, etc.). Therefore, we can already see that many types of signals, more or less complex, could undergo the same type of application.

However, most industrial systems offer monitoring based on numerous sensors, cameras, microphones, whose excessive complexity is a barrier to in-depth analysis.

Deep Learning provides tools to reduce this complexity by minimizing information loss (high-level features in a trained model). Diffusion models offer an innovative approach of this kind. For example, to characterize the noise present in a signal and decide whether this noise is external to the studied system or, conversely, whether this noise is a new component indicating a significant problem, diffusion models could be a very relevant tool as they precisely learn to add or remove noise from the data...

Moreover, these approaches also allow creating correspondences between different types of information. It was by combining learning on text and learning on images that OpenAI created DALL-E. Therefore, we can hope to have tools that convert information into another, for example, by transforming a temporal signal of mechanical functioning into an explanatory text of this good functioning. At this stage, it is high time to experiment while eagerly awaiting the next scientific developments.

Bien sûr, at Kickmaker, we are closely monitoring these topics and already experimenting to be able to offer you the best possible solutions tomorrow, combining the innovative quality of these works with our engineering implementation rigor. Indeed, beyond the scientific revolution, our challenge is to turn these trials into usable and controllable tools. Follow us, and if you want to delve a little deeper, let's discuss it! 2023 will be an exceptional year by following these application opportunities.

Article written by Eric Debeir, lead data scientist